Known issues#

We are aware of the following issues using Anaconda Enterprise. If you’re experiencing other unexpected behavior, consider checking our Support Knowledge Base.

Project is stuck in loading state on creation when email address is used as username#

You cannot use an email address for a username in Anaconda Enterprise. If your username is an email address and you attempt to create a project, it will appear to be stuck in a loading state. The project will disappear when you refresh the page.

Workaround

Log in to your Keycloak admin console and change the username to a value that is not an email address. Note that usernames also cannot be entirely numeric.
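Before renaming the user in Keycloak, you can check a candidate username against the two rules above. The function below is a hypothetical shell helper, not part of AE or Keycloak. It only encodes the two rules stated here and may not cover every restriction Keycloak enforces.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not an AE or Keycloak tool): succeeds if the proposed
# username is neither an email address nor made up entirely of digits.
is_valid_ae_username() {
  local name="$1"
  # Reject empty names.
  [ -n "$name" ] || return 1
  # Reject anything that looks like an email address (contains "@").
  case "$name" in
    *@*) return 1 ;;
  esac
  # Accept only if at least one character is not a digit.
  case "$name" in
    *[!0-9]*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example: is_valid_ae_username "jsmith" && echo "ok to use"
```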

Subsequent jobs using the “Run Now” schedule do not consume GPU resources#

When you schedule a job using the “Run Now” schedule with a GPU resource profile, the first run consumes GPU resources. Each subsequent run triggered with the Run schedule button does not consume a GPU resource.

Please note that this only occurs when the Run schedule button is selected multiple times for a job that is scheduled using the “Run Now” schedule. This does not affect any job scheduled with a cron expression.

Workaround

Do not use the Run schedule button to run the same job multiple times on the “Run Now” schedule. Instead, schedule the job with a cron expression.

Unable to obtain Zeppelin credentials#

After selecting Credential and clicking the question mark icon in the Zeppelin editor, the user should be redirected to Zeppelin documentation explaining the process for obtaining credentials. However, that link is broken.

Workaround

Rather than committing sensitive credentials to your code or repository through Zeppelin, store them in a Kubernetes secret in JSON format.

Attempting to install new PyViz packages in JupyterLab results in error#

The new PyViz libraries aren’t compatible with the version of JupyterLab used in Anaconda Enterprise. For more information on PyViz compatibility, see pyviz/pyviz_comms.

Workaround

Open the project in Jupyter Notebook.

Unable to download files when running JupyterLab in Chrome browser#

If you attempt to download a file from within a JupyterLab project running in Chrome, you may see a Failed/Forbidden error that prevents you from downloading the file.

Workaround

Open the project in Jupyter Notebook, or use another supported browser such as Firefox or Safari, and download the file.

Unexpected metadata in a package breaks AE channel#

The cspice and spiceypy packages mirrored from conda-forge include incompatible metadata, which causes a channeldata.json build failure and makes the entire channel inaccessible.

Workaround

Remove these packages from the AE channel, or update your conda-forge mirror to pull in the latest packages.

Custom conda configuration file may be overwritten#

If you add a custom .condarc file to your project using the anaconda-enterprise-cli spark-config command, it may get overwritten with the default config options when you deploy the project.

Workaround

Place the .condarc file in a directory other than your home directory (/opt/continuum/.condarc).

Note that conda loads configuration settings from all of the files on its config search path and merges them, with keys from higher-priority files taking precedence over keys from lower-priority files. If you need extra settings, try adding them to a .condarc file in a lower-priority location first and check whether that works for you.

For more information on how directory locations are prioritized, see this blog post.

Starting in Anaconda Enterprise 5.3.1, you can also set global config variables via a config map, as an alternative to using the AE CLI.

Incorrect information in command output#

When running the anaconda-enterprise-cli spark-config command to connect to a remote Hadoop Spark cluster from within a project, the output says you need to specify the namespace by including -n anaconda-enterprise.

Workaround

You must omit -n anaconda-enterprise from the command, as AE is installed in the default namespace.

Error creating an environment immediately after installation#

At least one project must exist on the platform before you can create an environment. If you attempt to create an environment first, the logs will say that the associated job is running, and the container isn’t ready.

Workaround

Create a project first. The environment creation process will continue and successfully complete after a few minutes.

Cluster performance may degrade after extended use#

The default value of fs.inotify.max_user_watches may be too low for a long-running cluster. Increasing it can improve cluster longevity.

Workaround

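Run the following command on each node in the cluster to help the cluster remain active:

sudo sysctl -w fs.inotify.max_user_watches=1048576

To ensure this change persists across reboots, also run the following command on each node:

echo "fs.inotify.max_user_watches = 1048576" | sudo tee /etc/sysctl.d/10-fs.inotify.max_user_watches.conf

Piping into sudo tee is required because in sudo echo ... > file, the redirection is performed by your unprivileged shell rather than by sudo, so writing to /etc/sysctl.d fails.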
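To confirm on each node that the new limit is in effect, you can compare the live value under /proc with the target. This is a minimal sketch using a hypothetical helper name. The /proc path is the standard Linux location for this setting, and it is a parameter only so the function can be tried against a scratch file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the current and target inotify watch limits and
# succeeds only if the current value is at least the target.
check_max_user_watches() {
  local target="${1:-1048576}"
  local proc_file="${2:-/proc/sys/fs/inotify/max_user_watches}"
  local current
  current="$(cat "$proc_file")" || return 2
  echo "current=${current} target=${target}"
  [ "$current" -ge "$target" ]
}

# Example (on a cluster node): check_max_user_watches || echo "limit still too low"
```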

Invalid issuer URL causes library to get stuck in a sync loop#

When using the Anaconda Enterprise Operations Center to create an OIDC Auth Connector, if you enter an invalid issuer URL in the spec, the go-oidc library can get stuck in a sync loop. This affects all connectors.

Workaround

On a single-node cluster, do the following to shut down gravity:

Find the gravity services:

systemctl list-units | grep gravity

You will see output like this (the version numbers in your service names may differ):

# systemctl list-units | grep gravity
gravity__gravitational.io__planet-master__0.1.87-1714.service loaded active running Auto-generated service for the gravitational.io/planet-master:0.1.87-1714 package
gravity__gravitational.io__teleport__2.3.5.service loaded active running Auto-generated service for the gravitational.io/teleport:2.3.5 package

Shut down the teleport service:

systemctl stop gravity__gravitational.io__teleport__2.3.5.service

Shut down the planet-master service:

systemctl stop gravity__gravitational.io__planet-master__0.1.87-1714.service

On a multi-node cluster, you need to shut down gravity AND delete all gravity-site pods (replace XXXXX with each pod’s suffix):

kubectl delete pods -n kube-system gravity-site-XXXXX

In both cases, you then need to restart the gravity services:

systemctl start gravity__gravitational.io__planet-master__0.1.87-1714.service

systemctl start gravity__gravitational.io__teleport__2.3.5.service
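The gravity service names include version numbers that vary between installations, so you may prefer to look them up rather than copy them from this page. The sketch below assumes the unit names follow the gravity__…​.service pattern shown in the sample output. The helper names are hypothetical. systemctl list-units --no-legend is a standard systemd option that suppresses the header and footer lines.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `systemctl list-units` output on stdin and prints
# the gravity unit names it contains, one per line.
# Assumption: names start with "gravity__" and end in ".service".
gravity_units() {
  grep -o 'gravity__[^[:space:]]*\.service'
}

# Hypothetical wrapper: stops teleport first, then planet-master, in the same
# order as the manual steps. Not run here; it needs root on a cluster node.
stop_gravity() {
  local units teleport planet
  units="$(systemctl list-units --no-legend | gravity_units)"
  teleport="$(printf '%s\n' "$units" | grep teleport)"
  planet="$(printf '%s\n' "$units" | grep planet-master)"
  sudo systemctl stop "$teleport"
  sudo systemctl stop "$planet"
}
```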

GPU affinity setting reverts to default during upgrade#

When upgrading Anaconda Enterprise from a version that supports the ability to reserve GPU nodes to a newer version (e.g., from 5.2.x to 5.2.3), the nodeAffinity setting reverts to the default value, thus allowing CPU sessions and deployments to run on GPU nodes.

Workaround

If you had commented out the nodeAffinity section of the Config map in your previous installation, you’ll need to do so again after completing the upgrade process. See Setting resource limits for more information.

Install and post-install problems#

Failed installations

If an installation fails, you can view the failed logs as part of the support bundle in the failed installation UI.

After executing sudo gravity enter, you can check /var/log/messages to troubleshoot a failed installation.

After executing sudo gravity enter, you can also run journalctl to look at the logs of the gravity service:

journalctl -u gravity-23423lkqjfefqpfh2.service

Note

Replace gravity-23423lkqjfefqpfh2.service with the name of your gravity service.

You may see messages in /var/log/messages related to errors such as “etcd cluster is misconfigured” and “etcd has no leader” from one of the installation jobs, particularly gravity-site. This usually indicates that etcd needs more compute power, needs more space, or is on a slow disk.

Anaconda Enterprise is very sensitive to disk latency, so we usually recommend using a faster, lower-latency disk for /var/lib/gravity on target machines and/or putting etcd data on a separate disk. For example, you can mount etcd under /var/lib/gravity/planet/etcd on the hosts.

After a failed installation, you can uninstall Anaconda Enterprise and start over with a fresh installation.

Failed on pulling gravitational/rbac

If the node refuses to install and fails on pulling gravitational/rbac, create a new TMPDIR directory before installing and give the user with UID 1000 write access to it.

“Cannot continue” error during install

This bug is caused by a previous failure of a kernel module check or other preflight check and subsequent attempt to reinstall.

Stop the install, make sure the preflight check failure is resolved, and then restart the install.

Problems during post-install or post-upgrade steps

Post-install and post-upgrade steps run as Kubernetes jobs. When they finish running, the pods used to run them are not removed. These and other stopped pods can be found using:

kubectl get pods -A

The logs in each of these three pods will be helpful for diagnosing issues in the following steps:

| Pod | Issues in this step |
|---|---|
| | post-install UI |
| | installation step |
| | post-update steps |

Post-install configuration doesn’t complete

After completing the post-install steps, clicking FINISH SETUP may not close the screen, which prevents you from continuing.

You can complete the process by running the following commands within gravity.

To determine the site name:

SITE_NAME=$(gravity status --output=json | jq '.cluster.token.site_domain' -r)

To complete the post-install process:

gravity --insecure site complete

Re-starting the post-install configuration

To reinitialize the post-install configuration UI, for example to regenerate temporary (self-signed) SSL certificates or to reconfigure the platform based on your domain name, you must re-create the service and re-expose it on a new port.

First, export the deployment’s resource manifest:

helm template --name anaconda-enterprise /var/lib/gravity/local/packages/unpacked/gravitational.io/AnacondaEnterprise/5.X.X/resources/Anaconda-Enterprise/ -x /var/lib/gravity/local/packages/unpacked/gravitational.io/AnacondaEnterprise/5.X.X/resources/Anaconda-Enterprise/templates/wagonwheel.yaml > wagon.yaml

Edit wagon.yaml, replacing image: ae-wagonwheel:5.X.X with image: leader.telekube.local:5000/ae-wagonwheel:5.X.X
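If you prefer not to edit wagon.yaml by hand, the substitution can be scripted. This is a minimal sketch with a hypothetical helper name. It assumes the image line appears exactly as image: ae-wagonwheel:<version>, as described above, and that the registry is leader.telekube.local:5000. Check the result before running kubectl create.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrites "image: ae-wagonwheel:<tag>" to point at the
# cluster-local registry. Lines that already carry a registry prefix do not
# match the pattern, so running it twice is harmless.
retag_wagonwheel() {
  local manifest="$1"
  local registry="${2:-leader.telekube.local:5000}"
  local tmp
  tmp="$(mktemp)" || return 1
  sed "s|image: ae-wagonwheel:|image: ${registry}/ae-wagonwheel:|" "$manifest" > "$tmp" || return 1
  # Copy back with cat so the original file keeps its permissions.
  cat "$tmp" > "$manifest" && rm -f "$tmp"
}

# Example: retag_wagonwheel wagon.yaml && grep 'image:' wagon.yaml
```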

Then recreate the ae-wagonwheel deployment using the updated YAML file (adjust the path below to wherever you saved wagon.yaml):

kubectl create -f /var/lib/gravity/site/packages/unpacked/gravitational.io/AnacondaEnterprise/5.X.X/resources/wagon.yaml -n kube-system

NOTE: Replace 5.X.X with your actual version number.

To verify that the deployment is running in the kube-system namespace, execute sudo gravity enter and run:

kubectl get deploy -n kube-system

One of these should be ae-wagonwheel, the post-install configuration UI. To make this visible to the outside world, run:

kubectl expose deploy ae-wagonwheel --port=8000 --type=NodePort --name=post-install -n kube-system

This will run the UI on a new port, allocated by Kubernetes, under the name post-install.

To find out which port it is listening under, run:

kubectl get svc -n kube-system | grep post-install

Then navigate to http://<your domain>:<this port> to access the post-install UI.
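In the kubectl get svc output, the PORT(S) column shows a value such as 8000:31234/TCP, where the number after the colon is the port to browse to. The hypothetical helper below extracts that number. The comment also shows a standard kubectl jsonpath query that prints the NodePort directly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extracts the NodePort from a PORT(S) value such as
# "8000:31234/TCP" (service port, then node port, then protocol).
nodeport_from_ports() {
  local ports="$1"
  ports="${ports#*:}"     # drop the service port and colon, e.g. "8000:"
  echo "${ports%%/*}"     # drop the protocol suffix, e.g. "/TCP"
}

# On the cluster, kubectl can also print the node port directly:
#   kubectl get svc post-install -n kube-system -o jsonpath='{.spec.ports[0].nodePort}'
```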

Kernel parameters may be overwritten and cause networking errors#

If networking starts to fail in Anaconda Enterprise, it may be because a kernel parameter related to networking was inadvertently overwritten.

Workaround

On the master node running AE, run gravity status and verify that all kernel parameters are set correctly. If the Status for a particular parameter is degraded, follow the instructions here to reset the kernel parameter.

Removing collaborator from project with open session generates error#

If you remove a collaborator from a project while they have a session open for that project, they might see a 500 Internal Server Error message.

Workaround

Add the user back to the project as a collaborator, have them stop their notebook session, and then remove them as a collaborator again. For more information, see how to share a project.

To prevent collaborators from seeing this error, ask them to close their running session before you remove them from the project.

Affected versions

5.2.x

AE auth pod throws OutOfMemory Error#

If you see an exception similar to the following, Anaconda Enterprise has exceeded the maximum heap size for the JVM:

Exception: java.lang.OutOfMemoryError thrown from the UncaughtExceptionHandler in thread "default task-248"

2018-08-29 23:13:26.327 UTC ERROR XNIO001007: A channel event listener threw an exception: java.lang.OutOfMemoryError: Java heap space (default I/O-36) [org.xnio.listener]

2018-08-29 23:12:32.823 UTC ERROR UT005023: Exception handling request to /auth/realms/AnacondaPlatform/protocol/openid-connect/token: java.lang.OutOfMemoryError: Java heap space (default task-86) [io.undertow.request]

2018-08-29 23:13:01.353 UTC ERROR XNIO001007: A channel event listener threw an exception: java.lang.OutOfMemoryError: Java heap space

Workaround

Increase the JVM max heap size by doing the following:

Open the anaconda-enterprise-ap-auth deployment spec by running the following command in a terminal:

$ kubectl edit deploy anaconda-enterprise-ap-auth

Increase the maximum heap size (-Xmx) in the value of JAVA_OPTS. In the example below, it is set to -Xmx2048m:

spec:
  containers:
  - args:
    - cp /standalone-config/standalone.xml /opt/jboss/keycloak/standalone/configuration/ && /opt/jboss/keycloak/bin/standalone.sh -Dkeycloak.migration.action=import -Dkeycloak.migration.provider=singleFile -Dkeycloak.migration.file=/etc/secrets/keycloak/keycloak.json -Dkeycloak.migration.strategy=IGNORE_EXISTING -b 0.0.0.0
    command:
    - /bin/sh
    - -c
    env:
    - name: DB_URL
      value: anaconda-enterprise-postgres:5432
    - name: SERVICE_MIGRATE
      value: auth_quick_migrate
    - name: SERVICE_LAUNCH
      value: auth_quick_launch
    - name: JAVA_OPTS
      value: -Xms64m -Xmx2048m -XX:MetaspaceSize=96M -XX:MaxMetaspaceSize=256m
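As an alternative to editing the spec interactively, you could compute the new JAVA_OPTS string and apply it with kubectl set env, a standard kubectl subcommand. Like kubectl edit, this changes the pod template and so triggers a rollout. The set_xmx helper below is hypothetical, and the 4096m value is only an illustration. If the container has a memory limit, keep -Xmx comfortably below it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replaces the -Xmx value in a JAVA_OPTS string, or
# appends one if none is present. Other options are left unchanged.
set_xmx() {
  local opts="$1" new="$2"
  if printf '%s\n' "$opts" | grep -q -- '-Xmx[0-9]'; then
    printf '%s\n' "$opts" | sed "s/-Xmx[0-9][0-9]*[kKmMgG]\{0,1\}/-Xmx${new}/"
  else
    printf '%s\n' "${opts} -Xmx${new}"
  fi
}

# Possible usage (review the printed value before applying it):
#   current='-Xms64m -Xmx2048m -XX:MetaspaceSize=96M -XX:MaxMetaspaceSize=256m'
#   kubectl set env deploy/anaconda-enterprise-ap-auth JAVA_OPTS="$(set_xmx "$current" 4096m)"
```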

Affected versions

5.2.1

Fetch changes behavior in Apache Zeppelin may not be obvious to new users#

A Fetch changes notification appears, but the changes do not get applied to the editor. This is how Zeppelin works, but users unfamiliar with the editor may find it confusing.

If a collaborator makes changes to a notebook that’s also open by another user, the user needs to pull the changes that the collaborator made AND click the small reload arrows to refresh their notebook with the changes (see below).

Affected versions

5.2.2

Apache Zeppelin can’t locate conflicted files or non-Zeppelin notebook files#

If you need to access files other than Apache Zeppelin notebooks within a project, you can use the %sh interpreter from within a Zeppelin notebook to work with files via bash commands. Alternatively, use the Settings tab to change the default editor to Jupyter Notebook or JupyterLab, and use the file browser or terminal.

Affected versions

5.2.2

Updating a package from the Anaconda metapackage#

When you update a package dependency of a project, if that dependency is part of the Anaconda metapackage, the package will be installed once, but a subsequent anaconda-project call will uninstall the upgraded package.

Workaround

When updating a package dependency, remove the anaconda metapackage from the list of dependencies at the same time as you add the new version of the dependency that you want to update.

Affected versions

5.1.0, 5.1.1, 5.1.2, 5.1.3

File size limit when uploading files#

You cannot upload a file to a project if it is larger than the current limit. In JupyterLab, the file upload limit is 15 MB.
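To check a file before uploading it, you can compare its size with the limit. This is a sketch with a hypothetical helper name. It assumes “15 MB” means 15 × 1024 × 1024 bytes. If your limit is counted differently, pass the byte limit as the second argument.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if FILE is no larger than the upload limit.
# Assumption: 15 MB = 15 * 1024 * 1024 bytes unless a limit is passed in.
fits_upload_limit() {
  local file="$1" limit_bytes="${2:-$((15 * 1024 * 1024))}"
  local size
  [ -f "$file" ] || return 2
  # Arithmetic expansion strips the padding some wc implementations print.
  size=$(( $(wc -c < "$file") ))
  [ "$size" -le "$limit_bytes" ]
}

# Example: fits_upload_limit data.csv || echo "too large to upload through JupyterLab"
```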

Affected versions

5.1.0, 5.1.1, 5.1.2, 5.1.3, 5.2.0, 5.2.1, 5.2.2, 5.2.3

IE 11 compatibility issue when using Bokeh in projects (including sample projects)#

Bokeh plots and applications have had a number of issues with Internet Explorer 11, which typically result in the user seeing a blank screen.

Workaround

Upgrade to the latest version of Bokeh available. On Anaconda 4.4 the latest is 0.12.7. On Anaconda 5.0 the latest version of Bokeh is 0.12.13. If you are still having issues, consult the Bokeh team or support.

Affected versions

5.1.0, 5.1.1, 5.1.2, 5.1.3

IE 11 compatibility issue when downloading custom Anaconda installers#

You cannot download a custom Anaconda installer from the browser when using Internet Explorer 11 on Windows 7. Attempting to download a custom installer with this setup results in the error “This page can’t be displayed”.

Workaround

Custom installers can be downloaded by refreshing the page with the error message, clicking the “Fix Connection Error” button, or using a different browser.

Affected versions

5.1.0, 5.1.1, 5.1.2, 5.1.3

Project names over 40 characters may prevent JupyterLab launch#

If a project name is more than 40 characters long, launching the project in JupyterLab may fail.

Workaround

Rename the project to a name no more than 40 characters long, and launch the project in JupyterLab again.

Affected versions

5.1.1, 5.1.2, 5.1.3

Long-running jobs may falsely report failure#

If a job (such as an installer, parcel, or management pack build) runs for more than 10 minutes, the UI may falsely report that the job has failed. The apparent job failure occurs because the session/access token in the UI has expired.

However, the job will continue to run in the background, the job run history will indicate a status of “running job” or “finished job”, and the job logs will be accessible.

Workaround

To prevent false reports of failed jobs from occurring in the UI, you can extend the access token lifespan (default: 10 minutes).

To extend the access token lifespan, log in to the Anaconda Enterprise Authentication Center, navigate to Realm Settings > Tokens, then increase the Access Token Lifespan to be at least as long as the jobs being run (e.g., 30 minutes).

Affected versions

5.1.0, 5.1.1, 5.1.2, 5.1.3

New Notebook not found on IE11#

On Internet Explorer 11, creating a new Notebook in a Classic Notebook editing session may produce the error “404: Not Found”. This is an artifact of the way that Internet Explorer 11 locates files.

Workaround

If you see this error, click “Back to project”, then click “Return to Session”. This refreshes the file list and allows IE11 to find the file. You should see the new notebook in the file list. Click on it to open the notebook.

Affected versions

5.0.4, 5.0.5

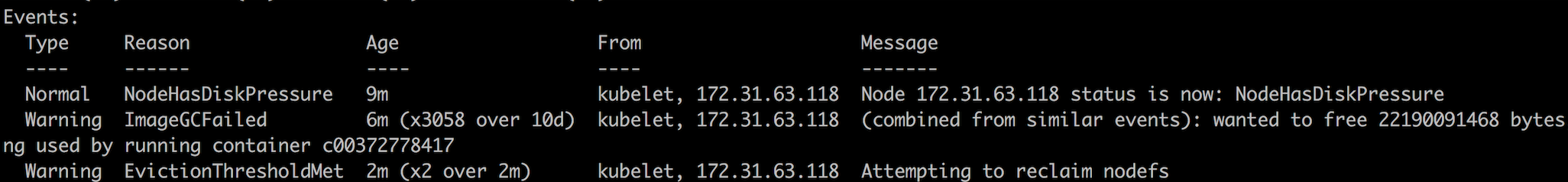

Disk pressure errors on AWS#

If your Anaconda Enterprise instance is on Amazon Web Services (AWS), overloading the system with reads and writes to the directory /opt/anaconda can cause disk pressure errors, which may result in the following:

Slow project starts.

Project failures.

Slow deployment completions.

Deployment failures.

If you see these problems, check the logs to verify whether disk pressure is the cause:

To list all nodes, run:

kubectl get node

Identify the node that is experiencing issues, then run the following command against it to view its details:

kubectl describe node <node-name>
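In the kubectl describe node output, the Conditions section lists a DiskPressure row whose status is True when the node is under disk pressure. The hypothetical helper below reads that output on stdin and reports whether the condition is True. It is a sketch that relies on the usual column layout (condition name, then status).

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl describe node` output on stdin and
# succeeds if the DiskPressure condition is reported as True.
has_disk_pressure() {
  awk '$1 == "DiskPressure" && $2 == "True" { found = 1 } END { exit !found }'
}

# Usage on the cluster (replace <node-name>):
#   kubectl describe node <node-name> | has_disk_pressure && echo "disk pressure detected"
```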

If there is disk pressure, the log will display an error message similar to the following:

Workaround

To relieve disk pressure, you can add disks to the instance by adding another Elastic Block Store (EBS) volume. If the disk pressure is being caused by a backup, you can move the backup file somewhere else (e.g., to an NFS mount). See Backing up and restoring AE for more information.

To add another Elastic Block Store (EBS) volume to the instance:

Open the AWS console and add a new EBS volume provisioned to 3000 IOPS. A typical disk size is 500 GB.

Attach the volume to your AE 5 master.

To find your new disk’s name, run fdisk -l. Our example disk’s name is /dev/nvme1n1. In the rest of the commands on this page, replace /dev/nvme1n1 with your disk’s name.

Partition the new disk:

fdisk /dev/nvme1n1

To create a new partition, at the first prompt press n and then the return key. Accept all default settings.

To write the changes, press w and then the return key. This will take a few minutes.

To find your new partition’s name, examine the output of the last command. If the name is not there, run fdisk -l again to find it. Our example partition’s name is /dev/nvme1n1p1. In the rest of the commands on this page, replace /dev/nvme1n1p1 with your partition’s name.

Make an ext4 file system on the new partition. The /etc/fstab entry below mounts it as ext4, whereas mkfs without -t creates ext2 by default:

mkfs -t ext4 /dev/nvme1n1p1

Make a temporary directory to capture the contents of /opt/anaconda:

mkdir /opt/aetmp

Mount the new partition to /opt/aetmp:

mount /dev/nvme1n1p1 /opt/aetmp

Shut down the Kubernetes system:

Find the gravity services:

systemctl list-units | grep gravity

You will see output like this:

# systemctl list-units | grep gravity
gravity__gravitational.io__planet-master__0.1.87-1714.service loaded active running Auto-generated service for the gravitational.io/planet-master:0.1.87-1714 package
gravity__gravitational.io__teleport__2.3.5.service loaded active running Auto-generated service for the gravitational.io/teleport:2.3.5 package

Shut down the teleport service:

systemctl stop gravity__gravitational.io__teleport__2.3.5.service

Shut down the planet-master service:

systemctl stop gravity__gravitational.io__planet-master__0.1.87-1714.service

Copy everything from /opt/anaconda to /opt/aetmp. The trailing slash on the source copies the directory’s contents, including hidden files that a /opt/anaconda/* glob would skip:

rsync -vpoa /opt/anaconda/ /opt/aetmp/

Include the new disk at the /opt/anaconda mount point by adding this line to your file systems table at /etc/fstab:

/dev/nvme1n1p1 /opt/anaconda ext4 defaults 0 0

Use mixed spaces and tabs in this pattern:

/dev/nvme1n1p1<tab>/opt/anaconda<tab>ext4<tab>defaults<tab>0<space>0

Move the old /opt/anaconda out of the way to /opt/anaconda-old:

mv /opt/anaconda /opt/anaconda-old

If you’re certain the rsync was successful, you may instead delete /opt/anaconda:

rm -r /opt/anaconda

Unmount the new disk from the /opt/aetmp mount point:

umount /opt/aetmp

Make a new /opt/anaconda directory:

mkdir /opt/anaconda

Mount all the disks defined in fstab:

mount -a

Restart the gravity services:

systemctl start gravity__gravitational.io__planet-master__0.1.87-1714.service

systemctl start gravity__gravitational.io__teleport__2.3.5.service
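The /etc/fstab step above is easy to apply twice by accident. The hypothetical helper below appends the entry only if no existing, uncommented line already mounts something at the same mount point. The fstab path is a parameter, so you can try it on a scratch copy before pointing it at /etc/fstab (which requires root).

```shell
#!/usr/bin/env bash
# Hypothetical helper: appends "<device> <mountpoint> <fstype> defaults 0 0"
# to the given fstab file unless the mount point already has an entry.
add_fstab_entry() {
  local fstab="$1" device="$2" mountpoint="$3" fstype="${4:-ext4}"
  # Skip comment lines; match on the second field (the mount point).
  if awk -v mp="$mountpoint" '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab"; then
    echo "entry for ${mountpoint} already present in ${fstab}" >&2
    return 0
  fi
  printf '%s\t%s\t%s\tdefaults\t0 0\n' "$device" "$mountpoint" "$fstype" >> "$fstab"
}

# Example: add_fstab_entry /etc/fstab /dev/nvme1n1p1 /opt/anaconda
```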

Disk pressure error during backup#

If a disk pressure error occurs while backing up your configuration, the amount of data being backed up has likely exceeded the amount of space available to store the backup files. This triggers the Kubernetes eviction policy defined in the kubelet startup parameter and causes the backup to fail.

To check your eviction policy, run the following commands on the master node:

sudo gravity enter

systemctl status | grep "/usr/bin/kubelet"
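The eviction policy appears as --eviction-* flags (for example --eviction-hard) on the kubelet command line printed by the command above. The hypothetical helper below pulls just those flags out of that text. The threshold values in the usage example are illustrative, not AE defaults.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints each --eviction-* flag found in text on stdin,
# e.g. the kubelet command line shown by `systemctl status`.
eviction_flags() {
  grep -o -- '--eviction-[a-z-]*=[^[:space:]]*'
}

# Usage inside `sudo gravity enter`:
#   systemctl status | grep "/usr/bin/kubelet" | eviction_flags
```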

Workaround

Restart the backup process, and specify a location with sufficient space (e.g., an NFS mount) to store the backup files. See Backing up and restoring AE for more information.

General diagnostic and troubleshooting steps#

Entering Anaconda Enterprise environment

To enter the Anaconda Enterprise environment and gain access to kubectl and other commands within Anaconda Enterprise, use the command:

sudo gravity enter

Moving files and data

Occasionally you may need to move files and data from the host machine to the Anaconda Enterprise environment. If so, there are two shared mounts you can use to pass data back and forth between the two environments:

host: /opt/anaconda/ -> AE environment: /opt/anaconda/

host: /var/lib/gravity/planet/share -> AE environment: /ext/share

If data is written to either of these locations, that data will be available both on the host machine and within the Anaconda Enterprise environment.

Debugging

On AWS, network traffic must be able to reach the public IPs and ports. Either use a canonical security group with the proper ports opened, or manually add the specific ports listed in Network Requirements.

Problems during air gap project migration

The command anaconda-project lock over-specifies the channel list, which triggers a conda bug that adds defaults from the internet to the list of channels.

Solution:

Add a default_channels entry to your .condarc. This way, when conda adds “defaults” to the command, it adds the internal repo server rather than the repo.continuum.io URLs.

EXAMPLE:

default_channels:
  - anaconda
channels:
  - our-internal
  - out-partners
  - rdkit
  - bioconda
  - defaults
  - r-channel
  - conda-forge
channel_alias: https://:8086/conda
auto_update_conda: false
ssl_verify: /etc/ssl/certs/ca.2048.cer

LDAP error in ap-auth

[LDAP: error code 12 - Unavailable Critical Extension]; remaining name 'dc=acme, dc=com'

This error can occur when pagination is turned on. Pagination is a server-side extension that some LDAP servers do not support, notably the Sun Directory server.

Session startup errors

If you need to troubleshoot session startup, you can use a terminal to view the session startup logs. When session startup begins, the output of the anaconda-project prepare command is written to /opt/continuum/preparing, and when the command completes, the log is moved to /opt/continuum/prepare.log.
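Because the log lives in one of two places depending on whether startup has finished, a small helper can pick the right file. This is a sketch with a hypothetical function name. It assumes only the two paths described above, and the base directory is a parameter so the logic can be tried elsewhere.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the path of the session startup log, preferring
# the in-progress file. Fails if neither file exists yet.
session_prepare_log() {
  local base="${1:-/opt/continuum}"
  if [ -e "$base/preparing" ]; then
    echo "$base/preparing"      # anaconda-project prepare is still running
  elif [ -e "$base/prepare.log" ]; then
    echo "$base/prepare.log"    # prepare has finished and the log was moved
  else
    return 1
  fi
}

# Usage from a session terminal (tail -F keeps following the name if the file moves):
#   tail -F "$(session_prepare_log)"
```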