Managing cluster resources

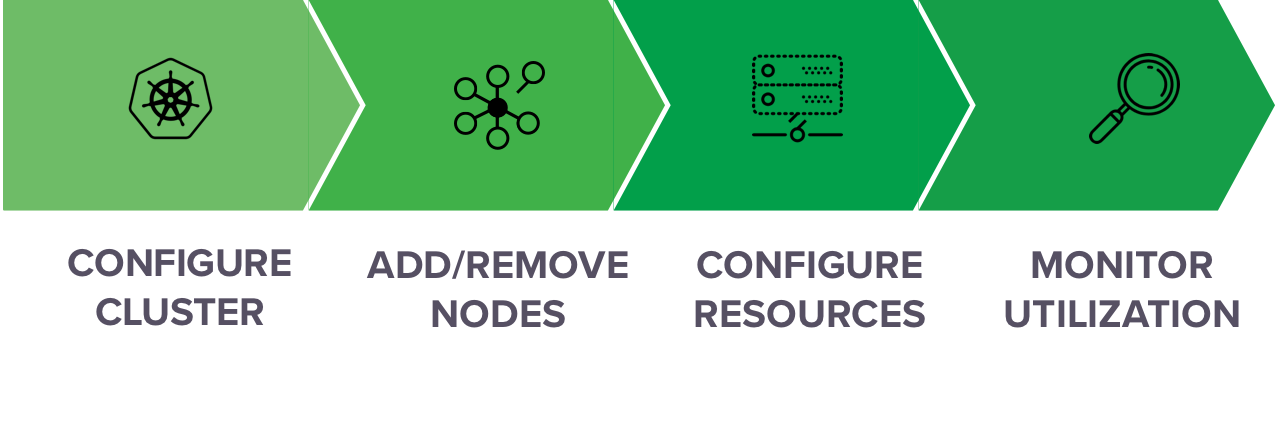

After you’ve installed a Data Science & AI Workbench cluster, you’ll need to keep managing and monitoring it so that it scales with your organization. These ongoing tasks include:

Adding nodes: If you outgrow your initial Workbench cluster installation, you can add nodes to your cluster, including GPU nodes. After the nodes are added, make them available to platform users by configuring resource profiles.

Monitoring and managing resources: To help you manage your organization’s cluster resources efficiently, Workbench lets you see which sessions and deployments are running on each node and which users started them. You can also monitor cluster resource usage in terms of CPU, memory, disk space, network, and GPU utilization.

Troubleshooting and debugging: To help you gain insight into user services and troubleshoot issues, Workbench provides detailed logs and debugging information for the Kubernetes services it uses and for all activity performed by users. For information about what to do if a master node fails, see fault tolerance in Workbench.